ReportServer_log.ldf log file size growth: The primary reason for the increase in the size of the report server database is the accumulation of high transaction logs, which occur daily as certain users interact with the report. Therefore, the initial step should be to examine the Power BI Report Server (PBIRS) log files. We will see all the steps which will find the resolution for us.

Client Complain:

We have Implemented Power BI Report Server at a client location and there’s a concern from them regarding the ReportServer_log.ldf log file size growth. When the database admin tried to delete or shrink, the report server crashed. We would like Microsoft approach in solving this problem using best practice methods.

TSG 1?

ReportServer_log.ldf

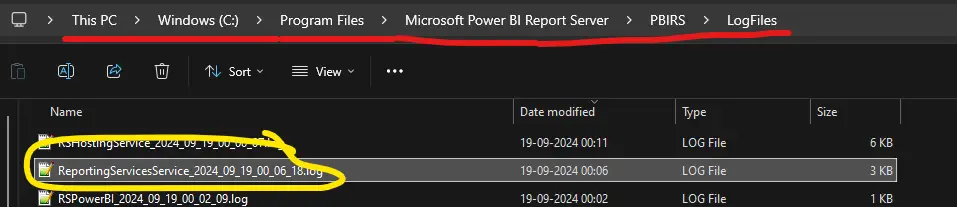

Please go to this log file’s location of PBIRS and check the logs which says “ReportingServerService_timestamp.log” and check the below outcome follows from step 1 to find ReportServer_log.ldf size growth.

Step 1: There could be primary cause for increase in the size of the report server database logs, is the accumulation of high transaction logs, which occur daily as certain users interact with the report. Therefore, the initial step should be to examine the Power BI Report Server (PBIRS) log files.

Note that a cleanup process is scheduled daily at 2 AM system time. Upon reviewing the logs, you will observe one of two outcomes: either the process was successful, or it was not. To confirm you can see these below logs traces:

Failure Traces in log files:

dbcleanup!WindowsService_1!1578!04/19/2024-01:59:59:: i INFO: Expiring old execution log entries

library!WindowsService_1!1578!04/19/2024-02:07:04:: e ERROR: Throwing Microsoft.ReportingServices.Diagnostics.Utilities.ReportServerStorageException: , An error occurred within the report server database. This may be due to a connection failure, timeout or low disk condition within the database.;

dbcleanup!WindowsService_1!1578!04/19/2024-02:07:04:: e ERROR: Expiration of old execution log entries failed: Microsoft.ReportingServices.Diagnostics.Utilities.ReportServerStorageException: An error occurred within the report server database. This may be due to a connection failure, timeout or low disk condition within the database. ---> System.Data.SqlClient.SqlException: Execution Timeout Expired. The timeout period elapsed prior to completion of the operation or the server is not responding. ---> System.ComponentModel.Win32Exception: The wait operation timed outSuccess Traces in log files:

dbcleanup!WindowsService_0!19b0!05/21/2024-01:59:59:: i INFO: Expiring old execution log entries

dbcleanup!WindowsService_0!19b0!05/21/2024-02:00:26:: i INFO: Expiration of old execution log entries is complete. Removed 170612 entries.

dbcleanup!WindowsService_0!19b0!05/21/2024-02:00:26:: i INFO: Cleaned 0 broken snapshots, 0 chunks, there were 0 unsuccessful attempts

dbcleanup!WindowsService_0!19b0!05/21/2024-02:00:26:: i INFO: Cleaned 0 parameters values

dbcleanup!WindowsService_0!19b0!05/21/2024-02:00:39:: i INFO: Cleaned 1554 unused segment mappings.Step 2: If you find that the clean success is not indicated, please proceed to the “executionlogs3” content, and review the entire history from the most recent entry to the earliest by executing this below query on the report server database. If you possess records that date back more than 60 days from today, this could be the main reason for the significant increase in the ReportServer_log.ldf database size.

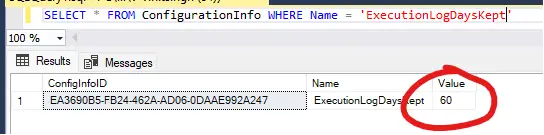

A) Default log file clean should happens IN 60 days.

>>SELECT * FROM ConfigurationInfo WHERE Name = 'ExecutionLogDaysKept’

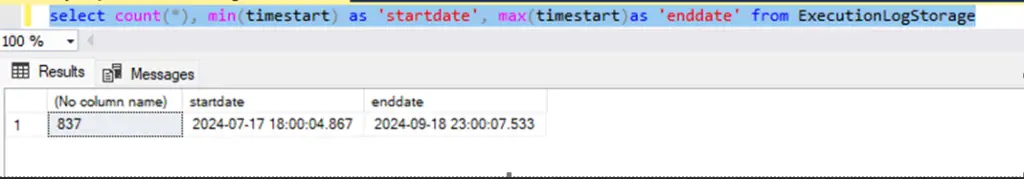

B) But if it looks like this is obviously not happening since the data is still growing from beginning then you have run this below query to check the data history present.>>select count(*), min(timestart) as “startdate”, max(timestart) as “enddate” from ExecutionLogStorage

When you notice an increase in the number of rows or data with start and end range, we need to manually delete the data from “executionlogs3” that is older than 60 days, which could potentially be an issue.

TSG 2?

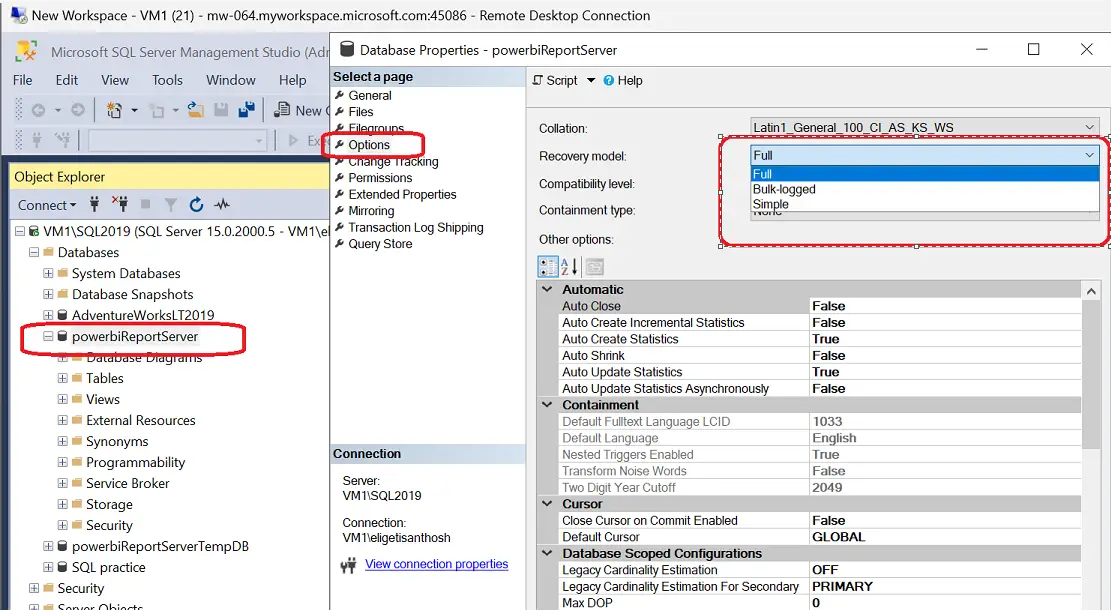

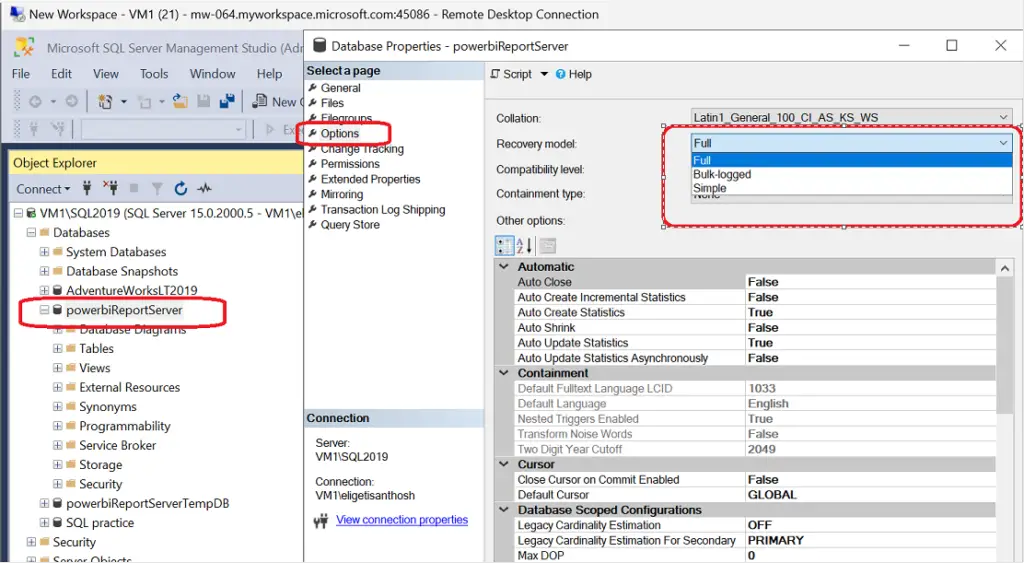

I have seen couple of cases earlier and found out that the issue might be caused by using full recovery model. Please change the recovery model to simple and test the behavior. The log file “ReportServer_log.ldf” size increase is an expected behavior if you are using subscriptions.

If you still want to use the full recovery model you need to back up the file based on your requirement. Please find below screenshot for changing the full recovery model to simple. For more click here!

TSG 3?

Report Server Database Is Growing?

There was issue encountered in Report Server database had grown to more than 2 TB in size and they were trying to figure out why and what was taking up all the space. The largest table in the Report Server database was the Segment table.

The first suspect was Snapshots. Report snapshots are stored in the report server database. The customer said they are not using snapshots but set the number of copies in Report History to 1 to see if they would make a difference but it didn’t. We looked for any reports in the catalog table where Snapshot Limit was set to a number higher than 1 but did not find any. So, at most there would be one snapshot stored for a report. We ran this query to see how many rows in the Snapshot Data table are linked to rows in the History table.

Select *

from SnapshotData SD, History H

Where SD.SnapshotDataID = H.SnapshotDataIDThere were about 60k rows. So, the customer had generated snapshots at one point in time but were no longer doing this. There is no expiration date for report history. The entries will stay until deleted. This is one area the customer can do some clean up.

What do the other 15k rows in the SnapshotData table represent?

I found they link to the catalog table like this

Select *

from SnapshotData SD, Catalog C

Where SD.SnapshotDataID = C.IntermediateWhen a report is deployed to Reporting Services, it is converted to an intermediate format and stored in the database. The following stored procedures are called when a report is deployed.

- InsertUnreferencedSnapshot – Inserts a row in SnapshotData table

- CreateSegmentedChunk – Inserts data into SegmentedChunk table

- CreateChunkSegment – Inserts data into Segment and ChunkSegmentMapping tables

- CreateObject – Inserts a row in the Catalog table

The larger the RDL file the more rows will be added to Segment, SegmentedChunk and ChunkSegmentMapping.

Analysis of the data in the Segment table indicates about 40% of the rows are orphaned rows and could be removed.

So why weren’t the rows being removed?

There is a cleanup process that runs every 10 minutes, but it doesn’t look for orphaned records in these tables. The stored procedures that do the cleanup of orphaned rows are RemoveSegment and RemoveSegmentedMapping. These stored procedures are run at 2 AM as part of the daily cleanup process. Each one takes input parameters that set the maximum number of rows that will be deleted. I collected a SQL profiler trace and saw RemoveSegment is limited to 100 and RemoveSegmentedMapping is limited to 1000. Because the customer is so far behind on cleanup they will never catch up at this rate. I recommended the customer run these stored procedures manually and with larger parameter values to help with the cleanup.

Why was the cleanup so far behind?

Suppose when environment is pretty large. Like having 7 servers in a scale-out deployment. When they run about 15k reports a day and add around 250 new reports each month. The daily cleanup process runs at 2 AM every day. This means the process kick off on all seven servers at the same time and they are all trying to clean up the same tables. When reviewed the report server log files and noticed during the daily clean up that when a cleanup process was delayed a couple of minutes it cleaned up more records than the ones that ran right at 2 AM. It looks like all of the cleanup processes running at the same time were interfering with each other. I recommended, set the cleanup time on each server to a different time. To set the Daily Cleanup to a time other than the default time of 2 AM you add this to the rsreportserver.config.

<Add Key=" DailyCleanupMinuteOfDay " Value="600" />The value is minutes after midnight. This will allow you to spread out the cleanup each server runs so they don’t interfere with each other. This should help Reporting Services keep up with the cleanup.

Here are some SQL queries that can be used to help identify problem areas.

#1) To get row count of each table run this query against the ReportServer database

SELECT

OBJ.Name AS TABLES, IDX.Rows AS ROWS_COUNT

FROM

sys.sysobjects AS OBJ

INNER JOIN

sys.sysindexes AS IDX

ON

OBJ.id = IDX.id

WHERE

type = 'U'

AND

IDX.IndId < 2

ORDER BY

IDX.Rows DESC

GO#2) Query to identify total number of rows that could be cleaned up during daily cleanup. Run against the ReportServer database

select count(*) as Segment

from Segment with (readpast)

where not exists (

select 1 from ChunkSegmentMapping CSM with (nolock)

where CSM.SegmentId = Segment.SegmentId)

select count(*) as SegmentTemp

from [ReportServerTempDB].dbo.Segment with (readpast)

where not exists (

select 1 from [ReportServerTempDB].dbo.ChunkSegmentMapping CSM with (nolock)

where CSM.SegmentId = [ReportServerTempDB].dbo.Segment.SegmentId)

select count(*) as SnapshotData

from SegmentedChunk with (readpast)

where not exists (

select 1 from SnapshotData SD with (nolock)

where SegmentedChunk.SnapshotDataId = SD.SnapshotDataID)

select count(*) as Chunk

from ChunkSegmentMapping with (readpast)

where not exists (

select 1 from SegmentedChunk SC with (nolock)

where SC.ChunkId = ChunkSegmentMapping.ChunkId)

select count(*) as SnapshotDataTemp

from [ReportServerTempDB].dbo.SegmentedChunk with (readpast)

where not exists (

select 1 from [ReportServerTempDB].dbo.SnapshotData SD with (nolock)

where [ReportServerTempDB].dbo.SegmentedChunk.SnapshotDataId = SD.SnapshotDataID)

select count(*) as ChunkTemp

from [ReportServerTempDB].dbo.ChunkSegmentMapping with (readpast)

where not exists (

select 1 from [ReportServerTempDB].dbo.SegmentedChunk SC with (nolock)

where SC.ChunkId = [ReportServerTempDB].dbo.ChunkSegmentMapping.ChunkId)#3) Looks for orphaned records in SegmentedChunk

select * from SegmentedChunk where SnapshotDataId not in (select SnapshotDataID from SnapshotData)#4) Looks for orphaned records in Snapshot data

select COUNT(*)

from SnapshotData

where SnapshotDataID not in

(select Intermediate

from Catalog

where Intermediate is not null

union

select SnapshotDataID

from Catalog

where SnapshotDataID is not null

union

select SnapshotDataID

from History)

and PaginationMode is not null

and PermanentRefcount > 0#5) To mark these records for cleanup run

update SnapshotData

set PermanentRefcount = 0

where SnapshotDataID not in

(select Intermediate

from Catalog

where Intermediate is not null

union

select SnapshotDataID

from Catalog

where SnapshotDataID is not null

union

select SnapshotDataID

from History

)

and PaginationMode is not null

and PermanentRefcount > 0#6) Cleans orphaned records in SegmentedChunk, ChunkSegmentMapping, Segment

A maximum of 20 records will be cleaned up with each run. To increase this number, find all instances of this line delete top (20) and replace 20 with the maximum number you want to use.

begin transaction

declare @cleanedSnapshots table (SnapshotDataId uniqueidentifier) ;

declare @cleanedChunks table (ChunkId uniqueidentifier) ;

declare @cleanedSegments table (ChunkId uniqueidentifier, SegmentId uniqueidentifier) ;

declare @deleteCount int ;

insert into @cleanedSnapshots

select distinct SnapshotDataId

from SegmentedChunk

where SnapshotDataId not in (select SnapshotDataID from SnapshotData)

-- clean up chunks

set @deleteCount = 1 ;

while (@deleteCount > 0)

begin

delete top(20) SC

output deleted.ChunkId into @cleanedChunks(ChunkId)

from SegmentedChunk SC with (readpast)

join @cleanedSnapshots cs on SC.SnapshotDataId = cs.SnapshotDataId ;

set @deleteCount = @@ROWCOUNT ;

end ;

-- clean up unused mappings

set @deleteCount = 1 ;

while (@deleteCount > 0)

begin

delete top(20) CSM

output deleted.ChunkId, deleted.SegmentId into @cleanedSegments (ChunkId, SegmentId)

from ChunkSegmentMapping CSM with (readpast)

join @cleanedChunks cc ON CSM.ChunkId = cc.ChunkId

where not exists (

select 1 from SegmentedChunk SC

where SC.ChunkId = cc.ChunkId )

and not exists (

select 1 from [ReportServerTempDB].dbo.SegmentedChunk TSC

where TSC.ChunkId = cc.ChunkId ) ;

set @deleteCount = @@ROWCOUNT ;

end ;

-- clean up segments

set @deleteCount = 1

while (@deleteCount > 0)

begin

delete top(20) S

from Segment S with (readpast)

join @cleanedSegments cs on S.SegmentId = cs.SegmentId

where not exists (

select 1 from ChunkSegmentMapping csm

where csm.SegmentId = cs.SegmentId ) ;

set @deleteCount = @@ROWCOUNT ;

end

commit TSG’s

- RDCMan Scaling Is Blurry: Fix In 3 Steps (kingfishertechtips.in)

- Port Number For Analysis Services – Find Ports For SSAS? (kingfishertechtips.in)

- SMTP Server In SSRS/PBIRS |Subscribe To Email Problem/Issue (kingfishertechtips.in)

- SQL Server 2022 Download, Install, Connect Using SSMS Tool (kingfishertechtips.in)

- Creating Tabular Project & Deploy Model To Analysis Service (kingfishertechtips.in)

- Deploy Tabular Project To Azure Analysis Service – SSDT Tool (kingfishertechtips.in)

- SSRS/PBIRS – Install And Configure The Report Server On-Prem (kingfishertechtips.in)

- Could Not Load Folder Contents In Portal URLs – SSRSPBIRS (kingfishertechtips.in)

- SSRS/PBIRS – Install And Configure The Report Server On-Prem (kingfishertechtips.in)

- SQL Server 2022 Download, Install, Connect Using SSMS Tool (kingfishertechtips.in)

- Capture Fiddler Trace for Debugging the Network Traffic (kingfishertechtips.in)

- On-Premises Gateway: Configure/Map SQL DB To Power Bi (kingfishertechtips.in)

- Fixed Column/Row Header Make Visible In Any Report (kingfishertechtips.in)

- Self-Signed Certificate: Configure SSRS/PBIRS With SSL (kingfishertechtips.in)

- Create Local User Account/Local Admin User In Windows 10/11 (kingfishertechtips.in)

- Login With AAD/Domain/Microsoft Account To Azure VM’s/RDP (kingfishertechtips.in)